LLMs are neural networks of the transformer architecture, technically composed of two units that work in tandem: the word embedder and the decoder.

The word embedder converts words into numbers, carrying them over from the world of human language to the world of computer information.

The second part is called ‘decoder’ for historical reasons,1 but I shall refer to it from now on by the more descriptive name generator, as it generates new data from existing data. The generator is a multilayer perceptron (described in the next post) with a self-attention mechanism (described later on) on top. The generator yields the next word given the previous words, thus generating new text. More exactly, it takes the numbers that the word embedder converted words into and yields new numbers, which the word embedder converts back to text.
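The loop of embedding, generating and converting back can be sketched in a few lines of Python. This is a toy under loud assumptions: the vocabulary, the functions `embed`, `unembed` and `toy_generator`, and the lookup rules are all made up for illustration. Each word's ‘number’ here is simply its position in the list, whereas a real word embedder produces a long vector per word, and a real generator computes the next number from learned weights rather than from hand-written rules.

```python
# Toy vocabulary; a word's "number" is just its index (an assumption for brevity).
VOCAB = ["the", "cat", "sat", "on", "mat", "."]

def embed(word):
    """Word embedder, forward direction: word -> number."""
    return VOCAB.index(word)

def unembed(number):
    """Word embedder, backward direction: number -> word."""
    return VOCAB[number]

def toy_generator(numbers):
    """Hypothetical stand-in for the generator: previous numbers -> next number.

    The rules are hand-written; the only point is that the output may depend
    on all previous numbers, not just the last one.
    """
    last = numbers[-1]
    if last == embed("the"):
        # After "the", say "mat" if "on" already occurred, otherwise "cat".
        return embed("mat") if embed("on") in numbers else embed("cat")
    follow = {"cat": "sat", "sat": "on", "on": "the", "mat": ".", ".": "."}
    return embed(follow[unembed(last)])

def generate(prompt, max_new_words=10):
    numbers = [embed(word) for word in prompt.split()]
    for _ in range(max_new_words):
        next_number = toy_generator(numbers)  # one new number per step
        numbers.append(next_number)           # fed back in on the next step
        if unembed(next_number) == ".":
            break
    return " ".join(unembed(n) for n in numbers)

print(generate("the cat"))  # the cat sat on the mat .
```

Note that the generator never sees a word, only numbers; the text appears solely because `embed` and `unembed` sit on either side of it.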

These two parts are separate technically and conceptually. They can be, and often are, trained separately as well. Either can function in isolation, but to little avail. Word embedders, while not exactly ‘useful’ on their own, can entertain humans who marvel at the inanimate behaving according to linguistic intuitions. They turn words into numbers, and numbers can be manipulated mathematically, which makes it possible to construct equations such as ‘king - man + woman =’ which yield ‘queen’ as the solution.2
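To make the ‘king - man + woman’ equation concrete, here is a minimal sketch in Python. The vectors are hand-picked by me for illustration, not taken from any trained word embedder, and the three dimensions can be loosely read as royalty, maleness and femaleness. The helper names `cosine` and `solve` are likewise hypothetical. The ‘solution’ is the word whose vector points most nearly in the same direction as the result of the arithmetic, with the input words themselves excluded, as such demonstrations commonly do.

```python
import math

# Hand-picked toy vectors (an assumption, not trained embeddings).
# Dimensions loosely read as: royalty, maleness, femaleness.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def solve(plus, minus):
    """Return the word closest to sum(plus) - sum(minus)."""
    dim = len(next(iter(EMBEDDINGS.values())))
    target = [0.0] * dim
    for word in plus:
        target = [t + e for t, e in zip(target, EMBEDDINGS[word])]
    for word in minus:
        target = [t - e for t, e in zip(target, EMBEDDINGS[word])]
    # Exclude the words that appear in the equation itself.
    candidates = [w for w in EMBEDDINGS if w not in plus and w not in minus]
    return max(candidates, key=lambda w: cosine(target, EMBEDDINGS[w]))

print(solve(plus=["king", "woman"], minus=["man"]))  # queen
```

With these toy numbers the arithmetic gives [0.9, 0.0, 0.9], which lies far closer in direction to ‘queen’ than to ‘apple’. In a trained embedder the same effect, where it occurs, emerges from the training text rather than from hand-chosen dimensions.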

The generator, on the other hand, would be completely alien to us without the intermediary word embedder. It can do computations on numbers, on m by n-sized matrices, but to what end? A thought experiment for the kind reader: had an alien civilization got its hands on the generator part of ChatGPT or another LLM without the word embedder part, like some broken outer-space-faring Rosetta stone, would its members be able to reconstruct a word embedder that translated the numbers into their own alien language and thereby allowed them to learn anything about the human species, its earth or culture? I'd say the answer to this also contains the answer to the question of whether ChatGPT really ‘understands’/‘knows’ anything.

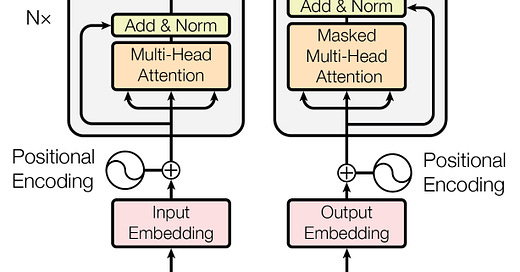

The figure shows the Transformer architecture as first published. The left half is the encoder, the right is the decoder/generator. The transformer generates one translated word at a time. The Inputs at the bottom refer to the entire to-be-translated text, the Outputs (shifted right) to the already translated text. The Nx alludes to the grey boxed structure being repeated an arbitrary number of times (‘n times’). The blue Feed Forward unit is equivalent to a multilayer perceptron. The peach Multi-Head Attention is the self-attention mechanism. The pink Input/Output Embedding are word embedders. All these will be discussed in the following posts. The yellow Add & Norm fulfills a technical function that will not be discussed in this series.

The transformer architecture was first used for translation tasks. In addition to a word embedder and a decoder it had an encoder. The encoder would be fed embedded French sentences, for example, and encode them into an internal representation. The decoder would be fed the encoder's output and ‘decode it back’ into English. The LLMs we are concerned with have dispensed with the encoder — even though, in effect, they can still translate.

There's the 2024 browser game Infinite Craft in which the player drags words onto each other to combine them, e.g. wind + earth = dust, where the result is computed by an LLM. The game has gained popularity. I can't help but see it as capturing in miniature some of the fascination people have with chat bots.